Abstract

Purpose

Artificial intelligence, specifically large language models such as ChatGPT, offers valuable potential benefits in question (item) writing. This study aimed to determine the feasibility of generating case-based multiple-choice questions using ChatGPT in terms of item difficulty and discrimination levels.

Methods

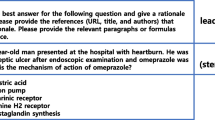

This study involved 99 fourth-year medical students who participated in a rational pharmacotherapy clerkship based on the WHO 6-Step Model. In response to a prompt that we provided, ChatGPT generated ten case-based multiple-choice questions on hypertension. Following review by an expert panel, two of these questions were incorporated into a medical school exam without any changes. After the exam was administered, we evaluated their psychometric properties, including item difficulty, item discrimination (point-biserial correlation), and functionality of the options.

Results

Both questions exhibited acceptable point-biserial correlations (0.41 and 0.39), above the threshold of 0.30. However, one question had three non-functional options (options chosen by fewer than 5% of the exam participants), while the other had none.
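
As an illustration of the indices reported here, the following is a minimal sketch of how item difficulty, point-biserial discrimination, and non-functional options could be computed from exam responses. It is not the authors' analysis code. The function names, the use of the uncorrected total score, and the population standard deviation are assumptions made for this example; only the 5% cut-off for non-functional options and the 0.30 discrimination threshold come from the text.

```python
from collections import Counter
from math import sqrt
from statistics import mean, pstdev


def item_difficulty(correct):
    """Proportion of examinees who answered the item correctly (0 to 1)."""
    return sum(correct) / len(correct)


def point_biserial(correct, total_scores):
    """Point-biserial correlation between a 0/1 item score and the total score.

    Assumption: uses the uncorrected total score and the population SD.
    """
    p = item_difficulty(correct)
    sd = pstdev(total_scores)
    if p in (0.0, 1.0) or sd == 0:
        raise ValueError("undefined when the item or total score has no variance")
    mean_right = mean(t for c, t in zip(correct, total_scores) if c)
    mean_wrong = mean(t for c, t in zip(correct, total_scores) if not c)
    return (mean_right - mean_wrong) / sd * sqrt(p * (1 - p))


def non_functional_options(responses, options, key, threshold=0.05):
    """Distractors chosen by fewer than `threshold` of examinees (5% in the text)."""
    counts = Counter(responses)
    n = len(responses)
    return [o for o in options if o != key and counts[o] / n < threshold]


# Hypothetical data, for illustration only (not the study's responses).
correct = [1, 1, 1, 0, 0]
totals = [5, 4, 3, 2, 1]
responses = ["A"] * 60 + ["B"] * 30 + ["C"] * 6 + ["D"] * 3

print(item_difficulty(correct))
print(point_biserial(correct, totals) > 0.30)  # compare with the 0.30 threshold
print(non_functional_options(responses, "ABCDE", key="A"))
```

Some analyses instead correlate the item with the total score excluding that item; the abstract does not state which variant was used.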

Conclusions

The findings showed that the questions can effectively differentiate between high- and low-performing students, which points to the potential of ChatGPT as an artificial intelligence tool in test development. Future studies may use the prompt to generate items and enhance the external validity of the results by gathering data from diverse institutions and settings.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

We express our gratitude to the medical students who participated in this study.

Author information

Contributions

Conceptualization: Yavuz Selim Kıyak, Özlem Coşkun, and Canan Uluoğlu. Methodology: Yavuz Selim Kıyak, Özlem Coşkun, and Işıl İrem Budakoğlu. Data collection: Özlem Coşkun and Canan Uluoğlu. Statistical analysis: Yavuz Selim Kıyak. Writing—original draft preparation: Yavuz Selim Kıyak. Writing—review and editing: Işıl İrem Budakoğlu, Özlem Coşkun, and Canan Uluoğlu.

Ethics declarations

Ethics approval

The study was performed in accordance with the ethical standards as laid down in the 1964 Declaration of Helsinki. This study has been approved by Gazi University Institutional Review Board (code: 2023-1116).

Consent to participate

Informed consent was obtained from the data owners.

Competing interests

The authors declare no competing interests.

About this article

Cite this article

Kıyak, Y.S., Coşkun, Ö., Budakoğlu, I.İ. et al. ChatGPT for generating multiple-choice questions: Evidence on the use of artificial intelligence in automatic item generation for a rational pharmacotherapy exam. Eur J Clin Pharmacol 80, 729–735 (2024). https://doi.org/10.1007/s00228-024-03649-x

DOI: https://doi.org/10.1007/s00228-024-03649-x