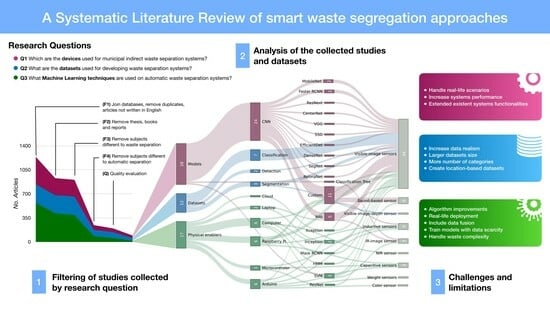

This section provides a review of the collected studies and datasets. It begins by presenting the responses to the three research questions, followed by an overview of the results.

3.1. Physical Enablers

Automatic waste sorting machines are devices that feed, classify, and separate waste automatically. They can be classified according to their level of automation:

- (i) Full automation: The system automatically seeks, classifies, and separates waste. To this end, a robot using IR cameras, proximity sensors, and robotic arms identifies objects on the ground [34].

- (ii) Moderate autonomy: The system classifies and separates the waste, but the user performs the feeding. Two different layouts can be observed:

- (a) Continuous feeding: A conveyor belt ensures that the waste is always sensed at the same spot. Sensing is performed using visible-image-based sensors (the most common) [9,35,36,37,38,39], inductive and capacitive sensors [8], near-infrared (NIR) sensors [35], and weight sensors [40]. Subsequently, the waste is classified and directed to the corresponding container by sorting arms [9,35], pneumatic actuators [36], servomotors [8,38,40], or an inclined platform onto which it falls [39]. This is the most popular system layout, proposed in 8 of the 17 articles.

- (b) Manual feeding: The user deposits the pieces of waste one at a time to be sensed by the device. Visible-image-based [6,7,41] and sound-based sensors [12], as well as inductive and capacitive sensors [42], are used for sensing. A gravity-based mechanism then performs the separation.

- (iii) Low autonomy: The user is responsible for feeding and separating the waste. The system identifies the waste and guides the user to the correct container by opening the corresponding lid [10,43]. Waste identification is performed with image classification [10], radio-frequency identification (RFID) [43], or the sound generated by the trash bags [44].

Figure 2 shows the sensors used. The first eight are used for the waste classification task, and the remaining five for complementary functionalities. The sensors used for waste classification can be grouped into (i) image-based sensors: visible, IR, NIR, and color sensors, (ii) sound-based sensors, (iii) inductive sensors, (iv) capacitive sensors, and (v) weight-based sensors.

Image sensors are the most common type of sensor used in waste sorting devices, appearing in 13 of 17 devices. They are often used alone, but some research has combined multiple image sensors. For example, visible-image-based sensors can be used for general waste classification (e.g., glass or plastic) and, combined with near-infrared (NIR) sensors, for applications that require more detailed categorization (e.g., PET, PS, PE, and PP) [35]. Regarding the other types of sensors, three combinations are identified: (i) capacitive sensor with inductive sensor [8,42,43], (ii) weight-based sensor with ultrasonic sensor [40], and (iii) sound-based sensor with inductive sensor [44].

Figure 2 shows the distribution of the computing devices used for each type of sensor. Both local and cloud computing are used, with local computing being the most frequently mentioned in the literature analyzed. All types of computing devices are used to process data from image-based sensors, whereas inductive and capacitive sensors are used only with edge computing devices.

Of the 17 articles reviewed, 12 used machine learning (ML) models to identify waste, typically with image-based or sound-based sensors. The remaining five present systems that do not use ML models but rely on sensor measurements (e.g., capacitive, inductive, or near-infrared) to discriminate directly between materials. Only one study used visible-image-based sensor input directly to classify waste, by reading the barcodes of the products [35].
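The non-ML systems map raw sensor states directly to a material class. The sketch below illustrates that idea with a hypothetical two-sensor rule that relies only on the general behavior of the sensors (inductive proximity sensors respond to metallic objects, while capacitive sensors respond to both metallic and non-metallic objects). The class names and the decision order are assumptions for illustration, not the logic of any reviewed device.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    inductive_triggered: bool   # inductive proximity sensors respond to metallic objects
    capacitive_triggered: bool  # capacitive sensors respond to metallic and non-metallic objects


def classify_by_sensors(reading: SensorReading) -> str:
    """Hypothetical decision rule: map raw sensor states to a coarse class, no ML model."""
    if reading.inductive_triggered:
        return "metal"      # only metallic objects trigger the inductive sensor
    if reading.capacitive_triggered:
        return "non-metal"  # an object is present, but it is not metallic
    return "no object"      # neither sensor detected anything


print(classify_by_sensors(SensorReading(inductive_triggered=True, capacitive_triggered=True)))   # metal
print(classify_by_sensors(SensorReading(inductive_triggered=False, capacitive_triggered=True)))  # non-metal
```

Real devices would add thresholds on the analog sensor signals and more classes; the ordering here simply checks the more specific (metal-only) sensor first.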

The most common complementary functionalities are trash-can fill-level detection [6,10,38], abnormal gas detection [10], a web platform [6,10,43], and a mobile application [38].

Figure 3 shows the implementation context of the sorting machines described in the different studies, that is, the environment in which each device has been used. The literature reports three types of context: laboratory, a device tested in a controlled environment; prototype, a device whose materials and functionality are the same as those of the production device; and on-context, a device tested in a real production environment. The vast majority (76%) of the sorting devices were evaluated in a laboratory environment with controlled variables.

Regarding their use, the reviewed systems have several limitations: 82% of them can dispose of only one piece of waste at a time and can only be used in controlled environments (noiseless settings or simple backgrounds). In addition, the waste must meet specific conditions (such as having a volume, being an empty package, or existing in the database) and, in some cases, must be positioned correctly on the sensors.

3.2. Datasets

Datasets are critical in waste identification, as most of this process relies on ML models. These models are trained on large datasets of labeled waste images, or other data, to identify the underlying patterns that relate the inputs (e.g., images of waste) to the expected outputs (e.g., labels of the waste type). This review studies 39 datasets, half of which are publicly available (Table 4). In half of the datasets, the authors recorded the observations themselves; the other half were collected from the internet by labeling web images or by extending public datasets. By label information, the datasets fall into three categories: single-label classification (64% of the datasets), detection with bounding boxes (23%), and pixel segmentation (13%). Visible-based images were the most used input data (34 datasets). Other sensors used were sound-based (three datasets), IR-based (two), RGBD-based (Red Green Blue Depth) (one), and inductive (one). Only two datasets use sensor fusion: the work presented in [45], which combines visible-based images with sound, and the work presented in [44], which combines the impact sounds of trash bags with inductive sensor readings.

Figure 4 shows the distribution of dataset labels. The first ring of the figure presents the material categories. Almost 80% of the dataset labels belong to seven categories (plastic, metal, paper, glass, organics, compounds, and cardboard). The remaining 20% correspond to less common object classes and materials (e.g., ceramics, e-waste, or recyclable). The second and third rings of the figure consist of object classes (e.g., bottle, battery, or can), material subcategories (e.g., aluminum, PET, or PE), product brands [6], material colors [46], material properties (e.g., high-density polyethylene) [15], and object classes of a specific material (e.g., metal–aluminum–can).
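The ring structure of Figure 4 can be read as hierarchical labels. A minimal sketch, assuming labels are written as paths such as `metal–aluminum–can` (the separator and the sample labels are hypothetical), computes the share of each first-ring material:

```python
from collections import Counter


def first_ring_shares(labels, sep="–"):
    """Return the share of labels that falls under each top-level material."""
    materials = [label.split(sep)[0].strip().lower() for label in labels]
    counts = Counter(materials)
    total = len(materials)
    return {material: count / total for material, count in counts.most_common()}


# Hypothetical hierarchical labels (material–subcategory–object)
labels = ["plastic–PET–bottle", "plastic–bag", "metal–aluminum–can", "glass–bottle"]
print(first_ring_shares(labels))  # {'plastic': 0.5, 'metal': 0.25, 'glass': 0.25}
```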

Nearly 30% (28.9%) of the dataset labels are of plastic materials. The most popular plastic categories are plastic bottles (eight) and plastic bags (seven), and the most common plastic subcategories are PET and PE [13,15,41]. Plastics are also classified by color: bottles as blue, green, white, or transparent [6]; PET as blue, dark, green, multicolor, teal, or transparent [46]; and tableware as green, red, or white [6]. In the metals category, aluminum is the only material subclass. Aluminum has multiple object categories, such as blisters, foil, and cans, among which cans are the most common (15 labels). In addition, aluminum cans are further classified by product brand [6].

Figure 5 presents the dataset sizes (number of observations) versus the number of separation categories. In the figure, blue represents classification datasets, red detection datasets, and green segmentation datasets. The median dataset size is 4288 observations, and the median number of sorting categories is five. Regardless of label type (classification, detection, or segmentation), the datasets present a similar median number of categories. However, the median number of observations of the segmentation datasets (7212) is almost double that of the detection and classification datasets. Moreover, the number of observations tends to decrease as the number of categories increases. In addition, most datasets (65%) present an unbalanced distribution of observations across categories.
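Two of the dataset statistics above, the median size and class imbalance, are simple to compute. The sketch below uses Python's `statistics.median`; the imbalance ratio (largest over smallest class count) and the 1.5 threshold are illustrative assumptions, since the review does not state how "unbalanced" was decided. The class counts are hypothetical.

```python
from statistics import median


def imbalance_ratio(class_counts):
    """Largest class count divided by the smallest (1.0 means perfectly balanced)."""
    counts = list(class_counts.values())
    return max(counts) / min(counts)


def is_unbalanced(class_counts, threshold=1.5):
    # The 1.5 threshold is an illustrative assumption, not a value from the review.
    return imbalance_ratio(class_counts) > threshold


sizes = [2527, 3126, 4000, 15150]  # four dataset sizes taken from Table 4
print(median(sizes))  # 3563.0 (mean of the two middle values)

counts = {"plastic": 600, "metal": 400, "trash": 150}  # hypothetical class counts
print(imbalance_ratio(counts), is_unbalanced(counts))  # 4.0 True
```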

The waste observation datasets come from municipal sources and were collected in four different environments: (i) general (15 datasets): objects in their context before being discarded, or an acquisition setup not designed for a specific machine or experiment; (ii) on-device (12): tailored to a specific sorting machine or experiment; (iii) indoors (10): taken inside institutions or households; and (iv) on-wild (3): waste thrown away on streets or in nature. Although the observations come from different environments, 62% of the datasets were captured with simple backgrounds (without noise, clutter, or other elements). In the rest (15 datasets), the observations include their context, and only one dataset used augmented backgrounds [9] (see Figure 6).

The geographic location of 31 of the 39 datasets is unknown (not specified by the authors), and 7 of them are composed of data from multiple locations. Of the eight datasets with known locations, four are public: Poland [15], Milan/Italy [6], Novosibirsk/Russia [46], and Crete/Greece [9]. The remaining datasets with known locations were built privately for ML model studies and come from Shanghai/China [7], Kocaeli/Turkey [12], Taiwan [11], and Rajshahi/Bangladesh [41].

The most popular public dataset is Trashnet [16], a six-category classification dataset with simple backgrounds. Trashnet has also been modified by adding detection [11,47,48] and segmentation labels [49]. In second place is Taco [18], a segmentation dataset with COCO-format labels [50] that currently contains 28 categories and 60 subcategories. Taco continues to grow through its website (http://tacodataset.org, accessed on 1 June 2022).
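Because Taco follows the COCO label format, its bounding boxes are stored as `[x, y, width, height]`, with (x, y) the top-left corner. The sketch below, using hypothetical box values, converts such a box to corner coordinates and computes the box intersection over union (IoU) that detection studies typically use to decide whether a prediction matches a labeled object:

```python
def coco_to_corners(bbox):
    """Convert a COCO box [x_min, y_min, width, height] to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


def box_iou(a, b):
    """Intersection over union of two boxes in corner format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero when the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


gt = coco_to_corners([0, 0, 10, 10])    # hypothetical ground-truth box
pred = coco_to_corners([5, 5, 10, 10])  # hypothetical predicted box
print(round(box_iou(gt, pred), 3))  # 0.143
```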

Table 5 summarizes state-of-the-art results on these public datasets.

Table 4.

Public datasets for waste separation. Dataset annotation types: classification (Classf), segmentation (Segm), and detection (Detec). The label distribution (Dist.) across the categories of each dataset is balanced (Baln) or unbalanced (Unbl). In the Categories column, the first number is the number of main categories and the second is the number of subcategories.


| Year | Categories | Context | Studies | Size | Annotation | Location | Dist. | Ref |
|---|---|---|---|---|---|---|---|---|
| 2021 | 5–39 | On-device | [6] | 3126 | Classf | Italy | Baln | [6] |
| 2017 | 6 | General | [11,17,38,47,48,49,51,52,53,54] | 2527 | Classf | - | Unbl | [16] |
| 2021 | 3 | On-device | [55] | 10,391 | Classf | - | Baln | [55] |
| 2019 | 6 | Indoors | [56] | 2437 | Classf | - | Unbl | [57] |
| 2019 | 3 | General | [58] | 2751 | Classf | Mixed | Unbl | [58] |
| 2020 | 3 | Municipal | [59] | 25,000 | Classf | - | Unbl | [60] |
| 2018 | 8–30 | General | [15,61] | 4000 | Classf | Poland | Unbl | [15] |
| 2019 | 2 | General | [17] | 25,077 | Classf | - | Unbl | [62] |
| 2020 | 3 | General | - | 27,982 | Classf | - | Unbl | [60] |
| 2022 | 7–25 | - | - | 17,785 | Classf | - | Unbl | [14] |
| 2021 | 12 | General | - | 15,150 | Classf | - | Unbl | [63] |
| 2021 | 3–18 | Indoors | - | 4960 | Classf | - | Baln | [64] |
| 2021 | 4 | General | [9] | 16,000 | Segm | Greece | Baln | [9] |
| 2020 | 28–60 | On-wild | [18,37,65,66] | 1500 | Segm | - | Unbl | [18] |
| 2020 | 22–16 | Underwater | [67] | 7212 | Segm | - | Unbl | [67] |
| 2020 | 1 | Indoors | [68] | 2475 | Segm | - | - | [68] |
| 2019 | 4–6 | On-device | [46] | 3000 | Detec | Russia | Unbl | [46] |
| 2021 | 1 | Aerial | [69] | 772 | Detec | - | - | [69] |
| 2021 | 4 | General | [17] | 57,000 | Detec | - | Unbl | [17] |
| 2020 | 4 | Indoors | - | 9640 | Detec | - | Unbl | [70] |

Table 5.

Machine learning studies that used public datasets, sorted by main performance metric (: dataset modified with different classes, annotation, or extended with more data). The metrics used are average accuracy (Acc.) for classification models, mean average precision (mAP) for detection models, and intersection over union (IOU) for segmentation models.


| Dataset | Study | Type | Architecture | Backbone | Extension | Acc. (%) | mAP (%) | IOU (%) |
|---|---|---|---|---|---|---|---|---|
| Trashnet | [51] | Classf | Resnext50 | Resnext | TL | 98 | - | - |
| Trashnet | [38] | Classf | Resnet34 | Resnet | TL | 95.3 | - | - |
| Trashnet | [53] | Classf | Custom (CNN) | Resnext | TL | 94 | - | - |
| Trashnet | [52] | Classf | Custom (CNN) | Googlenet, Resnet50, Mobilenet2 | - | 93.5 | - | - |
| Trashnet | [54] | Classf | Custom (SVM) | Mobilenet2 | - | 83.5 | - | - |
| Trashnet | [47] | Detec | SSD | MobileNet2 | TL | - | 97.6 | - |
| Trashnet | [48] | Detec | Yolo4 | DarkNet53 | - | - | 89.6 | - |
| Trashnet | [11] | Detec | Yolo3 | DarkNet53 | - | - | 81.6 | - |
| Trashnet | [49] | Segm | Segnet | VGG16 | - | - | - | 82.9 |
| Taco | [66] | Detec | RetinaNet | Resnet | - | - | 81.5 | - |
| Taco | [65] | Detec | Yolo5 | CSPdarknet53 | - | 95.5 | 97.6 | - |
| Taco | [37] | Detec | Yolo4 | CSPdarknet53 | TL | 92.4 | - | 63.5 |
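Table 5 reports IOU for the segmentation models. As a minimal sketch (binary masks as nested Python lists; the toy masks are hypothetical), the metric is the number of pixels labeled as waste in both the prediction and the ground truth, divided by the number labeled as waste in either:

```python
def mask_iou(pred, target):
    """IoU between two binary masks given as nested lists of 0/1 values."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += int(bool(p) and bool(t))  # waste in both masks
            union += int(bool(p) or bool(t))   # waste in at least one mask
    # Convention (an assumption): two empty masks count as perfect agreement.
    return inter / union if union else 1.0


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
print(mask_iou(pred, target))  # 0.5
```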

3.3. Machine Learning

Convolutional neural networks (CNNs) were the most common machine learning (ML) models used for waste identification in the reviewed studies, accounting for 87% of the models. Support vector machines (SVMs) were used in three studies, hidden Markov models (HMMs) in one study, and classification trees in one study. More than half (24 of 37) of the studies were published from 2021 onwards.

In this review, an ML architecture refers to the overall configuration of the ML technique that defines its components, parameters, etc. (e.g., the number of layers, their size, and the neuron type for an ANN). An ML model is an instance of an architecture whose parameters are learned for a specific dataset. The feature extractor, or backbone, is the part of an ML model that transforms raw inputs into a lower-dimensional representation (features) while preserving relevant information [71]. Finally, fine-tuning refers to reusing the parameter values of an existing model (usually a feature extractor) pretrained on a larger and more general dataset, in order to improve performance or overcome scarce training data on another dataset [72].

The datasets used to develop the ML models are of two types: image-based (visible and IR) and sound-based. Image-based models have been trained for three types of ML task: (i) single-label classification (20 of 37 models), in which the model predicts the class of the waste present in an image, so each image may contain only one piece of waste; (ii) bounding box detection (13 models), in which the model predicts the enclosing box and the class of every piece of waste in the image; and (iii) pixel segmentation (4 models), in which each pixel of the input image is classified as either background or a type of waste. Sound-based models, in contrast, have only been used for single-label classification.

The visible-image-based sensor was the most commonly used (31 of 37 studies), followed by the IR-image-based sensor (3 studies). The remaining studies use a single sound-based sensor (one), a sound-based sensor with visible-image-based sensors (one), or an inductive sensor (one) (see the distribution in Figure 7).

Only two studies propose data fusion involving the sounds of waste items: sounds generated by falling objects combined with images (visible-image-based and sound-based sensors), and sounds combined with inductive sensor readings (sound-based and inductive sensors). The study presented in [45] addresses two problems: image-based models struggle when different materials have similar appearances, and sound-based models struggle with mixed waste, which generates indistinguishable sounds. The authors proposed a method that extracts visual features with a pretrained VGG16 model [73] and acoustic features with a one-dimensional (1D) CNN; these features are then fused in a fully connected (FC) layer for waste classification. The study presented in [44] combines the impact sounds of trash bags with inductive sensor input to detect the presence of glass and metals. It used three variations of a basic CNN architecture (convolutional layers followed by FC layers), whose input data comprised Mel spectrograms, Mel frequency cepstral coefficients (MFCCs), and the metal detector frequencies.
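To make the fusion step of [45] concrete, the sketch below concatenates a visual and an acoustic feature vector and scores the result with a single FC layer followed by softmax. Concatenation is one common fusion choice and is assumed here; the vector sizes, weights, and biases are hypothetical toy values rather than the VGG16 and 1D CNN outputs used by the authors.

```python
import math


def fuse_and_classify(visual, acoustic, weights, biases):
    """Concatenate two feature vectors and score them with one fully connected layer."""
    fused = list(visual) + list(acoustic)  # feature-level fusion by concatenation
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    logits = [sum(w * x for w, x in zip(row, fused)) + b for row, b in zip(weights, biases)]
    top = max(logits)  # subtract the maximum logit for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]  # softmax over the waste classes


visual = [0.8, 0.1]   # hypothetical image features
acoustic = [0.6]      # hypothetical sound features
weights = [[1.0, 0.0, 1.0],   # class 0 scores
           [0.0, 1.0, 0.5]]   # class 1 scores
biases = [0.0, 0.0]
probs = fuse_and_classify(visual, acoustic, weights, biases)
print([round(p, 3) for p in probs])  # [0.731, 0.269]
```

In a trained network, the FC weights would be learned jointly so that the classifier can rely on sound when the images are ambiguous, and vice versa.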

The most popular public datasets are Trashnet [16] and Taco [18]. The mean of the average accuracy (Acc.) of the classification models on the Trashnet dataset was 92.9%. The study with the top Acc. (98%) for waste classification on Trashnet used a ResNeXt architecture [74] with transfer learning (TL) from a backbone pretrained on ImageNet [75], trained with data augmentation (e.g., rotations, shifts, zoom, or shear) [51].
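The 92.9% mean can be checked against the five Trashnet classification results listed in Table 5:

```python
from statistics import mean

# Acc. (%) of the Trashnet classification studies in Table 5
trashnet_acc = {"[51]": 98.0, "[38]": 95.3, "[53]": 94.0, "[52]": 93.5, "[54]": 83.5}
print(round(mean(trashnet_acc.values()), 1))  # 92.9
```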

Other studies used a modified version of Trashnet; thus, their results cannot be directly compared (see the marked studies in Table 5). In [47], a single shot detector (SSD) [76] that uses MobileNetV2 [77] as a feature extractor was fine-tuned on Trashnet annotated with bounding boxes, excluding the “trash” category. Likewise, [11] studied the use of YoloV3 [78] to detect waste. The authors found that training a detection model on a single-object dataset (such as Trashnet) was unsuitable for this type of ML task and that the datasets used need to be location-tailored. Similarly, [48] used a modified version of Trashnet to train a YoloV4 [79] detection model. The authors found that their models performed best when trained with mosaic data augmentation (combining four images into one). Additionally, [49] proposed a method for binary image segmentation (waste, no waste) based on a SegNet architecture [80] using Trashnet images. However, the proposed method struggles when the contrast between the waste and the background is low. Only one study [54] used an SVM on the Trashnet dataset. The model used MobileNetV2 as a feature extractor feeding an SVM classifier; its small number of parameters and operations allows it to be embedded in mobile applications.
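The mosaic augmentation mentioned for [48] combines four training images into one. The following is a simplified sketch under stated assumptions: images are equal-sized nested lists, they are tiled in a fixed 2x2 grid, and bounding boxes are shifted by each tile's offset. Practical implementations typically also rescale and crop the images around a randomly chosen center point; that step is omitted here.

```python
def mosaic_2x2(images, boxes_per_image):
    """Tile four equally sized images into one 2x2 mosaic and shift their boxes.

    images: four images as nested lists (rows of pixel values), ordered
            top-left, top-right, bottom-left, bottom-right.
    boxes_per_image: for each image, a list of (x_min, y_min, x_max, y_max, label).
    """
    if len(images) != 4 or len(boxes_per_image) != 4:
        raise ValueError("a mosaic needs exactly four images and four box lists")
    h, w = len(images[0]), len(images[0][0])
    if any(len(img) != h or any(len(row) != w for row in img) for img in images):
        raise ValueError("this simplified sketch expects images of identical size")

    top_left, top_right, bottom_left, bottom_right = images
    # Concatenate rows side by side, then stack the two halves vertically.
    canvas = [left + right for left, right in zip(top_left, top_right)]
    canvas += [left + right for left, right in zip(bottom_left, bottom_right)]

    # Each quadrant's pixels moved by (dx, dy); its boxes must move by the same offset.
    offsets = [(0, 0), (w, 0), (0, h), (w, h)]
    shifted = [
        (x1 + dx, y1 + dy, x2 + dx, y2 + dy, label)
        for (dx, dy), img_boxes in zip(offsets, boxes_per_image)
        for (x1, y1, x2, y2, label) in img_boxes
    ]
    return canvas, shifted


# Four hypothetical 2x2 "images" filled with 0, 1, 2, and 3
imgs = [[[i, i], [i, i]] for i in range(4)]
boxes = [[(0, 0, 1, 1, "can")], [], [], [(0, 0, 2, 2, "bottle")]]
canvas, shifted = mosaic_2x2(imgs, boxes)
print(canvas)   # [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]]
print(shifted)  # [(0, 0, 1, 1, 'can'), (2, 2, 4, 4, 'bottle')]
```

Because a single mosaic shows objects from four scenes, it is one way to give a detector more varied contexts from a dataset such as Trashnet, where each image holds a single object.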

Regarding studies that use the Taco dataset, [65] used an extended version of Taco to train a model based on YoloV5 with Darknet53 as the backbone [81]. The study found that YoloV5 performs better than its older versions and is suitable for embedded devices owing to its small size. Similarly, [37] proposed using YoloV4 with a tailored dataset that combines Taco with images from a sorting machine in a recycling facility. Their model could accurately detect waste on a conveyor belt in real-world live video feeds. The main disadvantages were hardware cost and energy consumption. The study [66] proposed a RetinaNet [82] model with ResNet50 [83] as the backbone to detect floating waste in the oceans as well as general waste. The dataset used was a relabeled subset of 369 Taco images.

We found 13 architecture types using 14 feature extractors or backbones in the surveyed studies.

Figure 8 shows the relationship between the feature extractors (on the right) and the architecture types (on the left). The most common approach was to propose a custom architecture: 33% of the CNN models used one, and custom architectures were prevalent among the classification models (8 of 20 studies). Of the custom architectures, only two are not purely image-based; both use sensor fusion [44,45].

Most of the studies used standard feature extractors as part of their models. The most common feature extractor (in 10 of 37 studies) was ResNet [83]. ResNet was mainly used in the detection models (7 of 14), together with architectures less common than Yolo, such as Faster RCNN [46,84], EfficientDet [85], custom architectures [17,86], RetinaNet [66], and CenterNet [87]. Darknet variations (Darknet and CSPdarknet) were the second most used feature extractors because they are the default backbones of Yolo architectures. Classification models showed more variation in their feature extractors, the two most popular (used four times each) being VGG [7,45,88] and MobileNet [54,55,58].

The most common patterns found in the reviewed articles with custom architectures were the following:

- (i) A standard feature extractor with a tailored head. The study [7] places a semantic retrieval model [89] on top of a VGG16 model to map the 13 subcategories returned by the CNN model onto four categories. Their results revealed that the proposed method achieved significantly higher performance in waste classification (94.7% Acc.) than the one-stage algorithm with direct four-category predictions (69.7% Acc.). The study [52] proposes an ensemble of three separately trained classification models (InceptionV1 [90], ResNet50, and MobileNetV2) whose predictions are integrated using weights derived from an unequal precision measurement (UPM) strategy. The model was evaluated on Trashnet (93.5% Acc.) and Fourtrash (92.9% Acc.). Ref. [53] proposed DNN-TC, which adds two FC layers to a pretrained ResNeXt model. DNN-TC was evaluated on Trashnet (94% Acc.) and on the authors' VN-trash dataset (98% Acc.). Ref. [56] proposed IDRL-RWODC, which uses a Mask region-based convolutional neural network (Mask RCNN) [91] with DenseNet [92] as the feature extractor to segment the waste image, and then passes the regions to a deep reinforcement Q-learning algorithm for classification. IDRL-RWODC was evaluated (99.3% Acc.) on a six-category dataset [57]. Ref. [17] developed a multi-task learning architecture (MTLA), a detection architecture with a ResNet50 backbone in which an attention mechanism (channel and spatial) is applied to each convolutional block. The feature maps are passed to a feature pyramid network (FPN) with different combination strategies. The architecture was tested on the WasteRL dataset, with nearly 57K images and four categories (97.2% Acc.).

- (ii) The improvement of an existing architecture. Ref. [39] presented GCNet, which improves ShuffleNetV2 with the FReLU activation function [93], a parallel mixed attention mechanism module (PMAM), and ImageNet transfer learning. Ref. [94] presented DSCR-Net, an architecture based on Inception-V4 and ResNet that is more accurate (94.4% Acc.) than the Inception-ResNet versions [95] on a custom four-category waste classification dataset.

- (iii) New architectures. Ref. [61] proposes a basic CNN architecture on RGB images for plastic material classification (PS, PP, HDPE, and PET). They used the WadaBa dataset [15], which contains a single piece of waste per image on a simple black background. Their model performed worse (74% Acc.) than MobileNetV2 but had half the number of parameters, making it appropriate for portable devices (e.g., Raspberry Pi).
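The ensemble of [52] combines the predictions of three separately trained models. The UPM weighting itself is not detailed in this review, so the sketch below uses a simpler stand-in: weighted soft voting in which each model's weight is a hypothetical validation precision, normalized to sum to one. The class probabilities are also hypothetical.

```python
def weighted_soft_vote(prob_lists, weights):
    """Average several models' class-probability vectors with normalized weights."""
    total = sum(weights)
    combined = [0.0] * len(prob_lists[0])
    for probs, weight in zip(prob_lists, weights):
        for k, p in enumerate(probs):
            combined[k] += (weight / total) * p
    return combined


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)


classes = ["glass", "metal", "paper"]
model_probs = [
    [0.6, 0.3, 0.1],  # model A
    [0.2, 0.7, 0.1],  # model B
    [0.3, 0.4, 0.3],  # model C
]
precisions = [0.9, 0.8, 0.7]  # hypothetical validation precision of each model
combined = weighted_soft_vote(model_probs, precisions)
print(classes[argmax(combined)])  # metal
```

Model A alone would answer "glass", but the weighted average of all three favors "metal", which is the point of combining complementary models.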

The second most used architecture type was Yolo, in its various versions. Yolo, a general-purpose object detection architecture, was used in 5 of the 14 reviewed detection models. YoloV3 was used to detect six waste classes of a Taiwan-sourced dataset (92% mAP) and was also evaluated on the TrashNet dataset for detection (81.4% mAP) [11]. YoloV4 detected four waste categories (glass, metal, paper, and plastic) in the TrashNet-based dataset [48]. Similarly, Ref. [96] used YoloV4 but added “fabric” to the classes to detect (92.2% mAP); their model had better detection accuracy than the single shot multibox detector (SSD) and Faster R-CNN models. Another study with YoloV4 [37] used an RGB-IR camera and an extended version of the Taco dataset. Further, Ref. [65] used YoloV5 to detect the 60 classes of the Taco dataset, reaching 95.49% Acc. and an mAP of 97.62%. Other standard architectures used for detection were Faster RCNN [46,84], RetinaNet [66], CenterNet [87], and SSD [47].

Regarding pixel segmentation, three of the four studies use a CNN. The most common architecture (used in two studies) was Mask R-CNN [9,56]. Ref. [49] uses the SegNet architecture with VGG16 as a feature extractor to segment an image into waste and no waste. In addition, Ref. [13] used classification trees for plastic material segmentation.

CNNs have achieved great success in image recognition tasks, surpassing other ML methods on several benchmarks. This is due to their ability to learn spatial features and patterns in images through a hierarchy of layers that perform convolution operations and extract features at different levels of abstraction [97]. CNNs dominated the reviewed studies; only four presented other ML model types, and, among them, the most common was the SVM, used in three studies. The study [34] proposed extracting SURF features [98] from IR images, mapping them into a bag-of-words vector, and feeding that vector to an SVM that classifies waste into three categories (aluminum can, plastic bottle, and tetra), reaching 94.3% Acc. Ref. [54] fed an SVM with the features extracted by a fine-tuned MobileNet model from RGB images. The approach was evaluated on Trashnet (83.46% Acc.). Ref. [12] investigated the use of sound to classify volumetric packaging of four materials (glass, plastic, metal, and cardboard), each in three different sizes, except metal packages, which had only two sizes. They developed two classification models, based on an SVM and an HMM [99], with MFCC features. Both models reached 100% Acc. on material classification and 88.6% Acc. on material and size classification. Finally, Ref. [13] uses near-infrared hyperspectral imaging to classify six recyclable plastic polymers used for packaging. Their approach is based on a hierarchical classification tree composed of PLS-DA (partial least squares discriminant analysis) models and was evaluated on a custom setup dataset, with an overall mean recall of 98.4%.
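The SURF bag-of-words pipeline of [34] turns a variable number of local descriptors into a fixed-length vector for the SVM. A minimal sketch of that quantization step, assuming a codebook has already been learned (commonly with k-means) and using toy 2-D descriptors in place of 64-dimensional SURF descriptors; the codebook and descriptors are hypothetical:

```python
def nearest_codeword(descriptor, codebook):
    """Index of the codeword closest to the descriptor (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda k: sum((d - c) ** 2 for d, c in zip(descriptor, codebook[k])),
    )


def bow_histogram(descriptors, codebook):
    """Normalized codeword histogram: the fixed-length vector passed to the classifier."""
    hist = [0.0] * len(codebook)
    for desc in descriptors:
        hist[nearest_codeword(desc, codebook)] += 1
    n = len(descriptors)
    return [h / n for h in hist] if n else hist


codebook = [[0.0, 0.0], [10.0, 10.0]]            # hypothetical 2-word vocabulary
descriptors = [[1, 1], [9, 9], [0, 2], [11, 10]]  # toy local descriptors from one image
print(bow_histogram(descriptors, codebook))  # [0.5, 0.5]
```

Whatever the number of keypoints found in an image, the histogram length equals the codebook size, which is what allows a standard SVM to consume it.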

Over half (21 articles) of the reviewed studies used TL, in which the feature extractor was pretrained on a large-scale dataset (usually ImageNet). During training on the target dataset (which contains the waste identification task), the feature extractor (or part of it) is “frozen”, so its parameters are not modified. Less frequently explored ML techniques were also considered, such as hyperparameter optimization [56], k-fold cross-validation [84], and ablation experiments [39]. Additionally, style transfer [87] and synthetic data [9] were used to increase the training data.
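Freezing can be pictured as an optimizer step that skips the backbone. In this framework-free sketch, parameters are plain lists keyed by hypothetical names such as `backbone.conv1` and `head.fc`; any parameter whose name starts with a frozen prefix keeps its pretrained value, while the head is updated by plain gradient descent. Deep learning frameworks provide their own mechanisms for the same idea, such as excluding parameters from gradient updates.

```python
def sgd_step(params, grads, frozen_prefixes, lr=0.01):
    """One gradient-descent update that keeps frozen (pretrained) parameters unchanged.

    params, grads: dicts mapping parameter names to lists of floats.
    frozen_prefixes: name prefixes (e.g. the backbone) whose values stay fixed.
    """
    updated = {}
    for name, values in params.items():
        if any(name.startswith(prefix) for prefix in frozen_prefixes):
            updated[name] = list(values)  # frozen: copy the pretrained values as they are
        else:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
    return updated


# Hypothetical parameter names and values
params = {"backbone.conv1": [0.5, -0.2], "head.fc": [0.1, 0.3]}
grads = {"backbone.conv1": [1.0, 1.0], "head.fc": [1.0, -1.0]}
updated = sgd_step(params, grads, frozen_prefixes={"backbone."}, lr=0.1)
print(updated["backbone.conv1"])                   # [0.5, -0.2]
print([round(v, 6) for v in updated["head.fc"]])  # [0.0, 0.4]
```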

Figure 9 shows the models’ performance with respect to the number of categories. The most commonly used performance metrics were average accuracy (Acc.) for classification models, mean average precision (mAP) for detection models, and average recall (Av. Rec.) for segmentation. Because some studies did not assess their models with these particular metrics, not all reviewed studies could be included in this analysis. The overall median performance of the models reviewed is 92.3%. For classification (21 studies), the median average accuracy is 94.7%, and the median number of classification categories is 4.5. For detection models, 14 studies were considered; the median mAP was 82.8%, and the median number of categories was five. Only three studies were considered for segmentation, with a mean Av. Rec. of 98% and a median of six categories. Notably, one study [12] reached an Acc. of 100% using sounds for a four-material classification task. The median number of categories across all reviewed models was 5, and the maximum was 204 classes. The study with 204 classes used the 2020 Haihua AI Challenge dataset. The authors developed a waste detection method based on a cascade RCNN with a cascade adversarial spatial dropout detection network (ASDDN) and an FPN, designed to improve performance on small objects and occlusions. Their proposal reached an mAP of 62%, which revealed that the dataset did not contain enough observations; the model also had trouble with unbalanced categories [86].
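Since detection results are summarized by mAP, the sketch below shows one common way to compute it: all-point interpolated average precision per class (as in PASCAL VOC from 2010 onward), averaged over classes. It assumes each detection has already been marked as a true or false positive by IoU matching against the ground truth, and the scores and matching results are hypothetical. The reviewed studies may use other IoU thresholds or interpolation schemes (COCO, for instance, averages over IoU thresholds from 0.50 to 0.95), so mAP values are not always comparable across papers.

```python
def average_precision(scores, is_true_positive, n_ground_truth):
    """All-point interpolated AP for one class.

    scores: confidence of each detection.
    is_true_positive: whether each detection matched a not-yet-matched ground-truth
        object (decided beforehand, e.g. with an IoU threshold of 0.5).
    n_ground_truth: number of labeled objects of this class.
    """
    if n_ground_truth == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # walk detections from most to least confident
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_ground_truth)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the envelope, summed over the points where recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP: the unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


scores = [0.9, 0.8, 0.7, 0.6]        # hypothetical detections for one class
matched = [True, False, True, True]  # hypothetical matching results
print(average_precision(scores, matched, n_ground_truth=4))  # 0.625
```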

Data augmentation has also been reported for training waste identification models to overcome the shortage of data. Ref. [100] proposes using deep convolutional generative adversarial networks (DCGANs) to generate synthetic samples that are combined with real ones to train a YoloV4 detector. Using this data augmentation improved the mAP by 4.54% compared with using only real samples. Similarly, [101] uses a generative adversarial network (GAN) to generate new images from TrashNet and GP-GAN [102] to generate collages of overlapping and non-overlapping trash objects. The authors evaluated waste classifiers using transfer learning from pretrained ImageNet models. The new images generated by the GAN could not be used because they lacked detail, and the inclusion of collages yielded no improvement in the classification models.

Finally, based on the confusion matrices and per-class evaluations presented in the reviewed studies, the difficult categories for the models were identified as the two lowest-scoring categories on either of the two metrics. The two most challenging categories, metal and trash, were each reported in seven articles.
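The selection of difficult categories can be sketched from a confusion matrix. The review does not name the two metrics, so this sketch assumes per-class recall and precision; the class names and counts are hypothetical.

```python
def per_class_recall_precision(confusion, classes):
    """confusion[i][j] counts samples of true class i predicted as class j."""
    n = len(classes)
    metrics = {}
    for k, name in enumerate(classes):
        tp = confusion[k][k]
        actual = sum(confusion[k])                          # row sum: true samples of class k
        predicted = sum(confusion[i][k] for i in range(n))  # column sum: predictions of class k
        recall = tp / actual if actual else 0.0
        precision = tp / predicted if predicted else 0.0
        metrics[name] = (recall, precision)
    return metrics


def hardest_classes(confusion, classes, n_worst=2):
    """Classes among the n_worst lowest scores under recall or under precision."""
    metrics = per_class_recall_precision(confusion, classes)
    worst_recall = sorted(classes, key=lambda c: metrics[c][0])[:n_worst]
    worst_precision = sorted(classes, key=lambda c: metrics[c][1])[:n_worst]
    return set(worst_recall) | set(worst_precision)


classes = ["glass", "metal", "paper", "trash"]
confusion = [  # hypothetical counts; rows = true class, columns = predicted class
    [45, 3, 1, 1],
    [5, 35, 2, 8],
    [0, 2, 46, 2],
    [2, 6, 4, 28],
]
print(sorted(hardest_classes(confusion, classes)))  # ['metal', 'trash']
```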

3.4. Overview of Results

The three reviewed areas (physical enablers, datasets, and ML algorithms) are closely related. ML models are trained and evaluated on datasets, and the type of data both use depends on the sensors that capture waste in the identification systems of segregation devices. The three areas and their underlying technologies are interconnected, as seen in Figure 10. From left to right, the first three branches indicate the number of studies for each research question. The middle of the figure presents the models/techniques (in purple), processing devices (in green), and contexts in which the datasets have been used (in blue). Finally, the sensors used are presented.

The distribution of studies on these three areas is as follows: 47% corresponds to ML models, 31% to systems or machines, and the rest (22%) to datasets.

The CNN was the most common ML technique (24 of 26 articles concerning ML models). However, custom model architectures (11 studies) prevailed over standard state-of-the-art ones. For feature extractors, in contrast, standard ones were the popular choice, the most popular being ResNet [83], used in 10 studies.

The most common prediction task of the ML models was image classification (20 studies), followed by object detection (13 studies) and image segmentation (4 studies). In image classification, the models were used to predict the material present in the image [10,51,61,103], the object type [7,39], or whether the object belongs to a recycling category [53,58,94]. Detection models were used to predict the rectangular area (bounding box) in which an object is located in the image, together with its material type [13,56,84,87,96] or object type [65]. Lastly, segmentation models classify each image pixel as a specific material type [9,56] or as waste or background [49].

Most of the proposed models use visible-image-based sensor data (45), except for a few studies that used sound-based (4) or infrared-based (3) sensor data. Vision models were thus by far the preferred choice. Other technologies, such as inductive, capacitive, and weight sensors, are used as direct inputs for sorting waste [8,40,43].

Datasets are closely related to ML models because they are required to train the models and evaluate their performance. However, only 12 of the 39 datasets are intended for general use rather than built for a specific model or system study. These “general-purpose” datasets are image-based, and the most common type of labeled dataset was for image classification.

Moreover, considering all the studies, the main categories (see Figure 11) are plastic (12.83%), glass (10.62%), metal (10.62%), and paper (9.29%); overall, there are more than 50 categories. Some studies classify waste by more specific object types (e.g., plastic bottles) [36] or brands (e.g., Heineken Beer Bottle) [6]. Other studies categorize objects by their type of processing after disposal (e.g., recyclable, organic, or hazardous) [7].

The reviewed studies proposing indirect sorting machines (17) fall into two categories. In the first, waste is separated directly at the consumer's location; more than half of the studies (10) belong to this category. In the second, waste is separated at a centralized location. The three most common processing devices were computers, Raspberry Pi, and Arduino (Figure 10). The systems show more variability in sensing devices for sorting (eight types identified) than the ML model studies, although, as in the ML models and datasets, visible-image-based sensors are the most used.